Inkeep MCP & RAG API

Power MCP Servers and AI Agents with your own data.

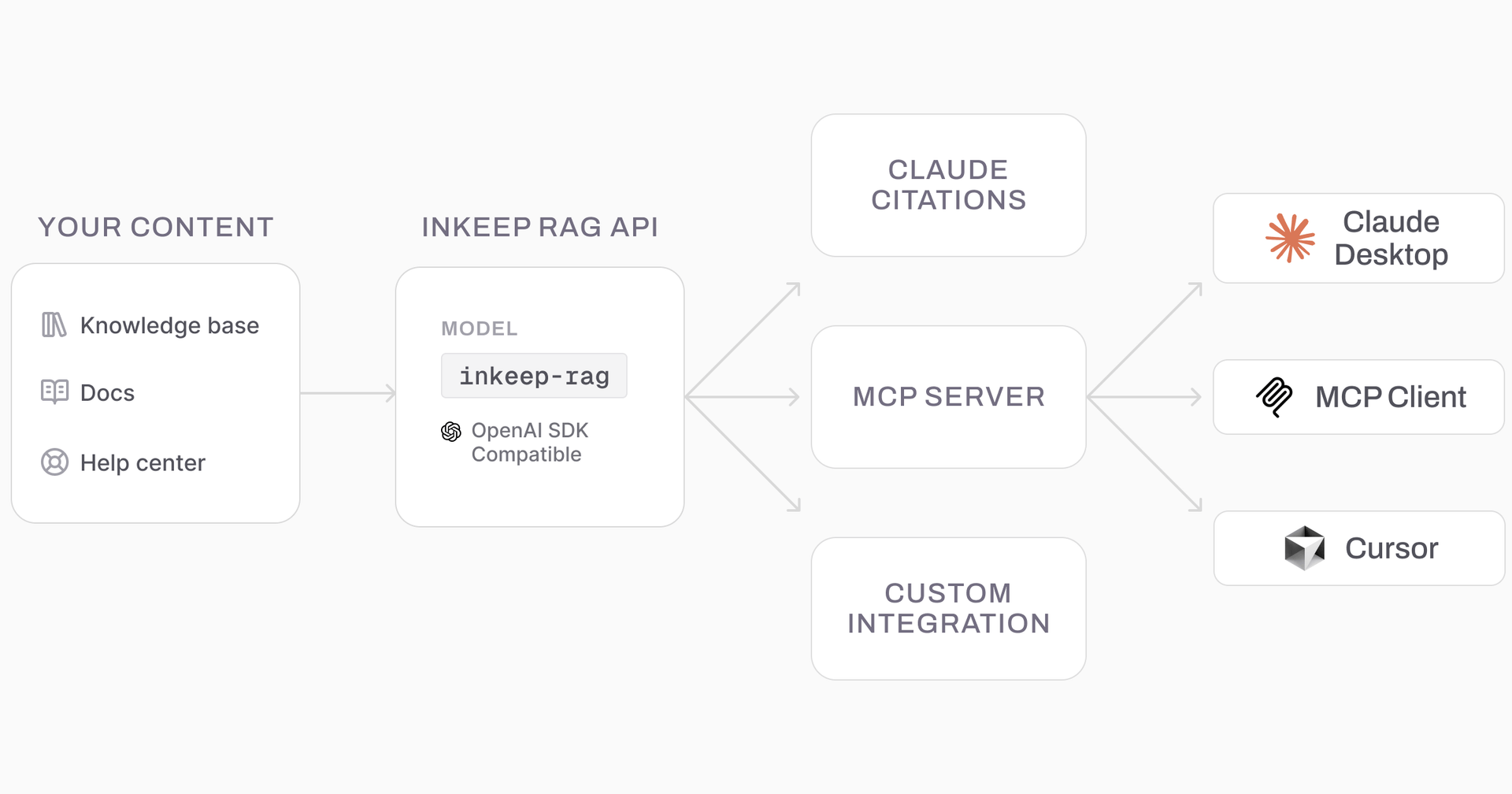

This week we launched a new API: inkeep-rag, which lets you query your docs, help center content, knowledge bases, and any other source ingested by Inkeep.

With this API, you can use your own content to power custom support agents, copilots, or custom workflows.

Let's see how to use it as part of an MCP Server, so we can connect it to Cursor or Anthropic's Claude Desktop App.

API Request

The inkeep-rag API takes in a query and outputs documents or chunks of content that can be passed directly to an LLM.

The API is exposed in the OpenAI chat completions format. The “query” is actually just a messages array, which makes it interoperable with the formats used by OpenAI, Anthropic, and other LLM providers. We can use any OpenAI-compatible SDK to make the request.
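Here is a minimal sketch of such a request using the official openai Python package. The base URL https://api.inkeep.com/v1 and the INKEEP_API_KEY environment variable are assumptions for illustration, so check Inkeep's API reference for the real endpoint and authentication. The model name inkeep-rag comes from the API's name. The openai import sits inside the function so the request builder can be used on its own.

```python
import os
from typing import Any

# Assumed endpoint; confirm against Inkeep's API reference.
INKEEP_BASE_URL = "https://api.inkeep.com/v1"


def build_rag_request(query: str) -> dict[str, Any]:
    """Build chat-completions arguments for a single-question RAG query.

    The "query" is just an OpenAI-style messages array.
    """
    return {
        "model": "inkeep-rag",
        "messages": [{"role": "user", "content": query}],
    }


def query_inkeep_rag(query: str) -> str:
    """Send the query and return the raw message content (the documents)."""
    # Imported lazily so build_rag_request works without the openai package.
    from openai import OpenAI

    client = OpenAI(
        base_url=INKEEP_BASE_URL,
        api_key=os.environ["INKEEP_API_KEY"],  # hypothetical env var name
    )
    response = client.chat.completions.create(**build_rag_request(query))
    return response.choices[0].message.content or ""
```

With the key set, print(query_inkeep_rag("How do I get started?")) prints the returned documents.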

The messages array can contain full conversations, system prompts, and even images. The Inkeep RAG service automatically handles any summarization or intermediate steps needed to find the most relevant content for the conversation.
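For example, a chat history can be sent as-is instead of being condensed into one question first. The sketch below builds such an array. The helper name build_conversation, the sample content, and the use of OpenAI's image_url content-part format for screenshots are illustrative assumptions, not documented Inkeep requirements.

```python
from typing import Any, Optional

Message = dict[str, Any]


def build_conversation(
    history: list[tuple[str, str]],
    question: str,
    system_prompt: Optional[str] = None,
    image_url: Optional[str] = None,
) -> list[Message]:
    """Turn a chat history into an OpenAI-style messages array.

    history: (role, text) pairs such as ("user", "...") or ("assistant", "...").
    image_url: optional screenshot for the latest question, sent with OpenAI's
    image content-part format (assumed to be accepted by inkeep-rag).
    """
    messages: list[Message] = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    for role, text in history:
        messages.append({"role": role, "content": text})

    if image_url:
        # Multimodal turn: a list of content parts instead of a plain string.
        content: Any = [
            {"type": "text", "text": question},
            {"type": "image_url", "image_url": {"url": image_url}},
        ]
    else:
        content = question
    messages.append({"role": "user", "content": content})
    return messages
```

The result is passed unchanged as the messages argument of a chat completions call with model="inkeep-rag".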

API Response

The inkeep-rag API responds with content containing an array of documents.
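Here is a sketch for reading those documents out of a response. It rests on several assumptions: the assistant message's content is a JSON string shaped like {"content": [...]}, and each document follows Claude's document block shape (type, title, and a source of type text or content). The url field and all helper names are also hypothetical. Inspect a real response before relying on any of them.

```python
import json
from typing import Any

Document = dict[str, Any]


def parse_documents(message_content: str) -> list[Document]:
    """Extract document objects from the raw message content (assumed JSON)."""
    payload = json.loads(message_content)
    return [item for item in payload.get("content", []) if item.get("type") == "document"]


def document_text(doc: Document) -> str:
    """Flatten a document's source into plain text.

    Handles the two Claude document source types that carry text:
    "text" (one string in data) and "content" (a list of text blocks).
    """
    source = doc.get("source", {})
    if source.get("type") == "text":
        return source.get("data", "")
    if source.get("type") == "content":
        return "\n".join(
            block["text"] for block in source.get("content", []) if block.get("type") == "text"
        )
    return ""


def summarize(docs: list[Document]) -> list[str]:
    """One line per document: title, optional URL, and text length."""
    lines = []
    for doc in docs:
        url = doc.get("url")  # assumed Inkeep extension field
        label = doc.get("title", "untitled") + (f" ({url})" if url else "")
        lines.append(f"{label}: {len(document_text(doc))} chars")
    return lines
```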

Using the content

This format extends Anthropic's Claude Citations format, so it can be used directly by Anthropic's Claude models and, more generally, by any LLM or tool.

The documents can also be passed directly to Claude.
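Here is a minimal sketch using the official anthropic Python SDK. It assumes the documents have already been parsed from the inkeep-rag response into a list of dicts. Inkeep's format extends Claude's, so as a precaution the helper keeps only the fields Claude's document block defines (type, source, title, context, citations) and turns citations on. The model alias and max_tokens value are placeholders.

```python
from typing import Any

# Fields defined by Claude's document content block; Inkeep-specific extras are dropped.
CLAUDE_DOCUMENT_FIELDS = ("type", "source", "title", "context", "citations")


def to_claude_document(doc: dict[str, Any]) -> dict[str, Any]:
    """Keep only Claude's document fields and enable citations."""
    block = {key: doc[key] for key in CLAUDE_DOCUMENT_FIELDS if key in doc}
    block["citations"] = {"enabled": True}
    return block


def build_claude_messages(documents: list[dict[str, Any]], question: str) -> list[dict[str, Any]]:
    """Place the retrieved documents before the question in a single user turn."""
    content = [to_claude_document(doc) for doc in documents]
    content.append({"type": "text", "text": question})
    return [{"role": "user", "content": content}]


def ask_claude(documents: list[dict[str, Any]], question: str) -> str:
    """Call Claude and join its text blocks (citation metadata is not kept here)."""
    import anthropic  # the client reads ANTHROPIC_API_KEY from the environment

    client = anthropic.Anthropic()
    response = client.messages.create(
        model="claude-3-5-sonnet-latest",  # placeholder: any model that supports citations
        max_tokens=1024,
        messages=build_claude_messages(documents, question),
    )
    return "".join(block.text for block in response.content if block.type == "text")
```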

Using it within an MCP Server

To use this RAG model with Cursor, Anthropic's Claude Desktop App, or other MCP compatible clients, use this template to get started.

You can customize the MCP Server with any business logic you like. As a basic example, we can create an MCP Tool that takes in a query and calls the Inkeep RAG API.
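Here is a minimal sketch of such a tool built with the official MCP Python SDK (the mcp package and its FastMCP class). It is not necessarily how the template does it. The endpoint URL, environment variable, server name, and tool name search_docs are assumptions. The tool forwards the query to inkeep-rag and returns the raw document JSON for the client's LLM to read.

```python
import os
from typing import Any

INKEEP_BASE_URL = "https://api.inkeep.com/v1"  # assumed endpoint


def rag_request(query: str) -> dict[str, Any]:
    """Chat-completions arguments for inkeep-rag: the query as a one-message array."""
    return {"model": "inkeep-rag", "messages": [{"role": "user", "content": query}]}


def search_inkeep(query: str) -> str:
    """Call inkeep-rag and return the message content (documents as JSON text)."""
    from openai import OpenAI

    client = OpenAI(base_url=INKEEP_BASE_URL, api_key=os.environ["INKEEP_API_KEY"])
    response = client.chat.completions.create(**rag_request(query))
    return response.choices[0].message.content or ""


def main() -> None:
    """Start an MCP server that exposes a single search tool."""
    from mcp.server.fastmcp import FastMCP

    server = FastMCP("inkeep-rag")

    @server.tool()
    def search_docs(query: str) -> str:
        """Search the documentation and knowledge base content indexed by Inkeep."""
        return search_inkeep(query)

    server.run()  # stdio transport by default, for locally launched MCP clients
```

To run it, add if __name__ == "__main__": main() at the bottom. Then register the script as a command in the client's MCP server configuration. Business logic such as filtering or reformatting results would go inside search_docs.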

Getting started

As you can see, we build on common, established formats and standards, so it's easy to plug Inkeep into any LLM application or use case.

To get started with our new RAG API, check out the open source repo to set up your own local MCP server powered by your own content.